Hold onto your hats, content creators and tech enthusiasts! Meta has just dropped a bombshell in the AI world, unleashing its brand new AI models: Emu Video and Emu Edit. Imagine conjuring up stunning videos and images simply by typing a few words. Sounds like magic? Well, Meta’s latest creations are here to make that magic a reality, setting the stage for an epic showdown in the generative AI arena.

Just this Thursday, Meta gave us a sneak peek into these groundbreaking tools, Emu Video and Emu Edit, offering the first tangible glimpse of the tech initially teased at Meta Connect back in September. Let’s dive into what makes these AI wonders tick!

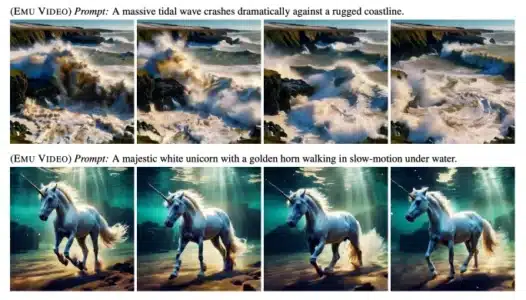

- Emu Video: Your Text, Your Video – This tool is all about turning your text prompts into captivating videos. Forget complex video editing software, just describe what you want to see, and Emu Video gets to work.

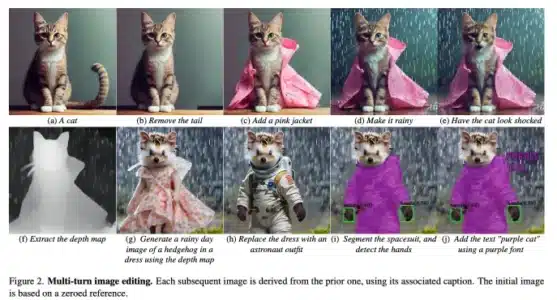

- Emu Edit: Inpainting Innovation – Emu Edit introduces a fresh approach to image editing through ‘inpainting’. Think of it as intelligent image manipulation based on your natural language instructions.

This isn’t just about cool new gadgets; Meta’s introduction of Emu Video and Emu Edit is a strategic masterstroke. They’re positioning these tools as vital components in their grand vision for the Metaverse. According to Meta, these AI tools are designed to unlock new creative horizons for everyone, from seasoned pros crafting high-end content to everyday users eager to express their ideas in novel ways.

Emu Video, in particular, is making waves. It’s a clear signal of Meta’s serious commitment to pushing the boundaries of AI-driven content generation. Could it be a major contender against Runway and Pika Labs, which have been leading the charge in this exciting space? The competition is heating up!

Emu Video: Text to Moving Pictures – How Does It Work?

Ever wondered how AI can translate your words into a video? Emu Video employs a smart two-step process:

- Image First: It starts by generating a still image based on your text prompt. Think of it as the AI sketching out the scene.

- Video Creation: Next, it takes that image and your original text prompt to generate a video. This clever method simplifies video creation compared to the more intricate multi-model approaches behind Meta’s previous Make-A-Video tool.
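
The two steps above can be sketched in code. Since the Emu Video models are not publicly accessible, everything here is a hypothetical stand-in: the `generate_image`, `generate_video`, and `text_to_video` functions, the `Image` and `Video` classes, and the default frame count are all assumptions made for illustration, and the stubs only mimic the data flow rather than any real generation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Image:
    """Placeholder for a generated still image (no real pixels)."""
    prompt: str
    width: int = 512   # output resolution mentioned in the article
    height: int = 512


@dataclass
class Video:
    """Placeholder for a generated clip: a list of frames."""
    prompt: str
    first_frame: Image
    frames: List[Image]


def generate_image(prompt: str) -> Image:
    """Step 1 (stub): turn the text prompt into a still image."""
    return Image(prompt=prompt)


def generate_video(image: Image, prompt: str, num_frames: int = 16) -> Video:
    """Step 2 (stub): condition on BOTH the image and the original text.

    num_frames is an illustrative default, not a documented setting.
    """
    later_frames = [
        Image(prompt=prompt, width=image.width, height=image.height)
        for _ in range(num_frames - 1)
    ]
    return Video(prompt=prompt, first_frame=image, frames=[image] + later_frames)


def text_to_video(prompt: str, num_frames: int = 16) -> Video:
    """Chain the two steps: text -> image, then (image, text) -> video."""
    image = generate_image(prompt)
    return generate_video(image, prompt, num_frames)


video = text_to_video("a corgi surfing a wave", num_frames=8)
print(len(video.frames), video.first_frame.width, video.first_frame.height)
```

The point of the sketch is the shape of the pipeline: the second step receives the generated image and the original prompt together, which is the simplification the article describes.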

While Emu Video outputs videos at a resolution of 512×512 pixels, the magic lies in how well it understands and interprets text prompts. It’s surprisingly good at transforming text into coherent visual narratives. This ability to maintain consistency between text and visuals is what sets Emu Video apart from many existing models and commercial solutions.

Keen to try it out? Although the models themselves aren’t publicly accessible just yet, you can play around with a set of pre-selected prompts. The results are impressively smooth, with minimal jarring transitions between frames, as you can see on their demo page.

Emu Edit: Your Words are Its Image Editing Command

Not to be outdone, Emu Edit steps into the limelight as Meta’s AI-powered image editing wizard. It’s designed to perform a variety of image editing tasks simply by understanding natural language instructions. Want to remove an object? Change the background? Emu Edit offers a high degree of precision and flexibility, making image manipulation more intuitive than ever.

Meta’s research paper boldly states that “Emu Edit [is] a multi-task image editing model which sets state-of-the-art results in instruction-based image editing.” This highlights its ability to accurately execute even complex editing commands.

What’s the secret behind Emu Edit’s precision? It leverages diffusion models, the class of generative AI technology that gained popularity with Stable Diffusion. This approach is meant to integrate edits seamlessly while preserving the original image’s visual quality and integrity. No more clunky, unnatural-looking edits!

Meta’s Metaverse Vision: AI as the Building Block

Meta’s dedication to developing AI tools like Emu Video and Emu Edit is deeply rooted in its broader strategy to build the Metaverse. These tools are not standalone projects; they are integral components in creating immersive and interactive digital experiences. Think about it: seamlessly generating videos and editing images with AI opens up incredible possibilities for user-generated content within virtual worlds.

This commitment extends to other AI initiatives, such as Meta AI, their personal assistant built on the Llama 2 large language model, and the integration of multimodality in augmented reality (AR) devices. Meta is clearly betting big on AI to drive the future of digital interaction and content creation. Are Emu Video and Emu Edit just the beginning of a new era in AI-powered creativity? It certainly looks that way!

Disclaimer: The information provided is not trading advice. Bitcoinworld.co.in holds no liability for any investments made based on the information provided on this page. We strongly recommend independent research and/or consultation with a qualified professional before making any investment decisions.